
1. Preprocess SLAM left-right camera images

https://github.com/hongsukchoi/generic_tools/blob/master/aria_stereo.py

Undistort and rotate images

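```python
import numpy as np
from projectaria_tools.core import calibration

# `provider` is a VRS data provider created elsewhere, e.g.
# provider = data_provider.create_vrs_data_provider(vrs_path)

def get_undistorted_rotated_image(stream_id, frame_idx, src_calib, dst_calib):
    # Read the raw SLAM frame as a numpy array
    raw_image = provider.get_image_data_by_index(stream_id, frame_idx)[0].to_numpy_array()

    # Resample the raw image from the source (device) calibration into the
    # target pinhole calibration, i.e. undistort it
    undistorted_image = calibration.distort_by_calibration(raw_image, dst_calib, src_calib)

    # Rotate the image by 90 degrees clockwise (k=3 is three counterclockwise turns)
    rotated_image = np.rot90(undistorted_image, k=3)

    # Rotate the pinhole calibration the same way so it matches the rotated image
    pinhole_cw90 = calibration.rotate_camera_calib_cw90deg(dst_calib)

    return rotated_image, pinhole_cw90
```

To understand why and how I do this, see: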

https://facebookresearch.github.io/projectaria_tools/docs/tech_spec/hardware_spec (Hardware Specifications | Project Aria Docs)

Image Utilities (Python and C++) | Project Aria Docs

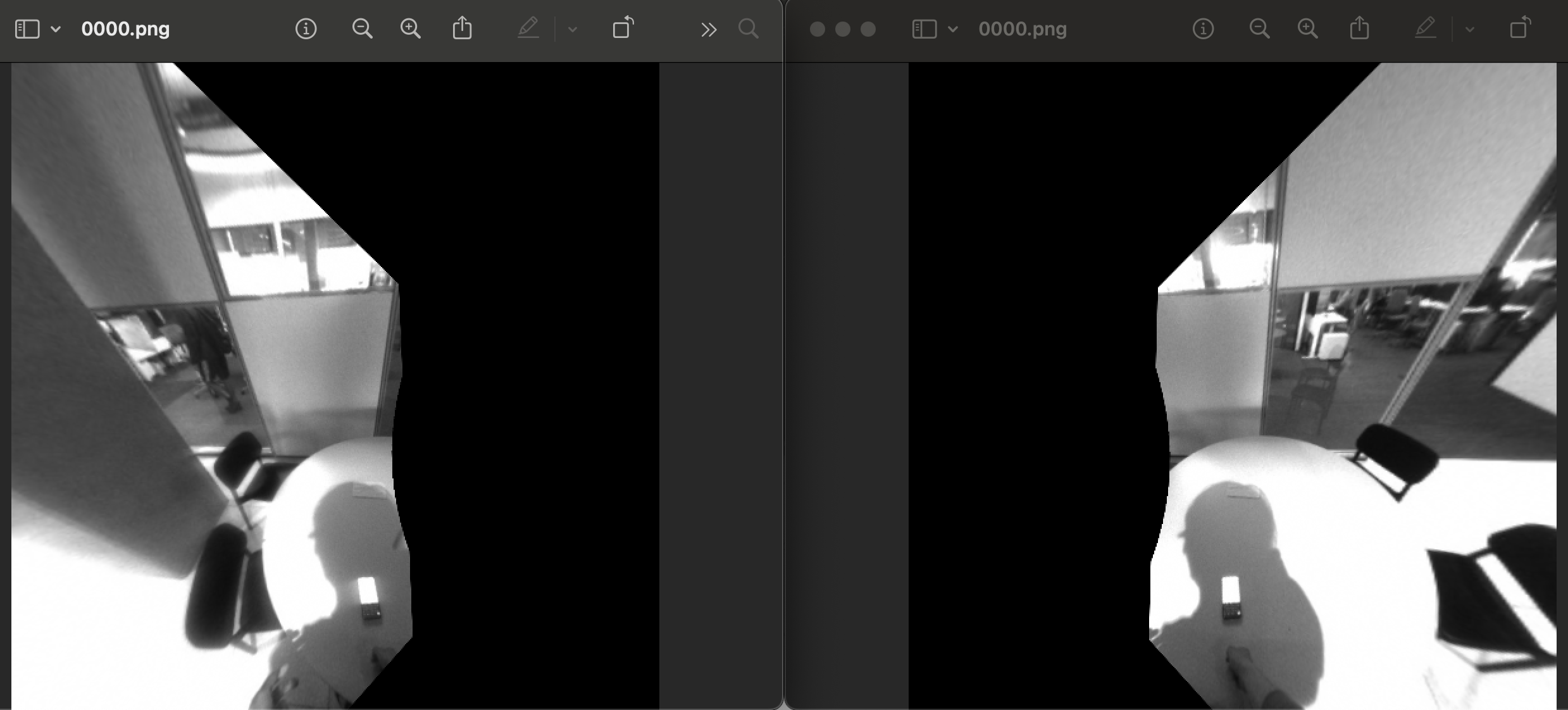
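Why the calibration has to be rotated together with the image can be seen with a plain pinhole model. The sketch below is illustrative only, not the projectaria_tools implementation: it assumes a 3×3 intrinsic matrix K, pixel centers at integer coordinates, and hypothetical helper names (`rotate_intrinsics_cw90`, `rotate_points_cw90`, `project`). Under `np.rot90(image, k=3)` a pixel (u, v) of an H-row image moves to (H - 1 - v, u), so fx and fy swap and the principal point becomes (H - 1 - cy, cx).

```python
import numpy as np

def rotate_intrinsics_cw90(K, height):
    """Intrinsics of an image rotated 90 degrees clockwise (hypothetical helper).

    K: 3x3 intrinsics of the original image; height: its number of rows H.
    Assumes integer pixel centers, so (u, v) -> (H - 1 - v, u).
    """
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    return np.array([[fy, 0.0, height - 1 - cy],
                     [0.0, fx, cx],
                     [0.0, 0.0, 1.0]])

def rotate_points_cw90(points):
    """Express Nx3 camera-frame points in the rotated camera frame: X' = -Y, Y' = X."""
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    return np.stack([-y, x, z], axis=1)

def project(K, points):
    """Pinhole projection of Nx3 camera-frame points; returns Nx2 (u, v)."""
    uvw = points @ K.T
    return uvw[:, :2] / uvw[:, 2:3]

# Toy example with made-up intrinsics for a 480x512 image
H, W = 480, 512
K = np.array([[150.0, 0.0, 255.5],
              [0.0, 150.0, 239.5],
              [0.0, 0.0, 1.0]])
pts = np.array([[0.1, -0.2, 1.0], [0.3, 0.05, 2.0]])
uv = project(K, pts)
uv_rot = project(rotate_intrinsics_cw90(K, H), rotate_points_cw90(pts))
```

`uv_rot` matches (H - 1 - v, u) for every point, i.e. the rotated calibration projects 3D points exactly onto where `np.rot90(..., k=3)` moved the pixels. Using the original K on the rotated image would put every point in the wrong place.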

Rectify the left and right SLAM camera images using the new (undistorted, rotated) calibration data

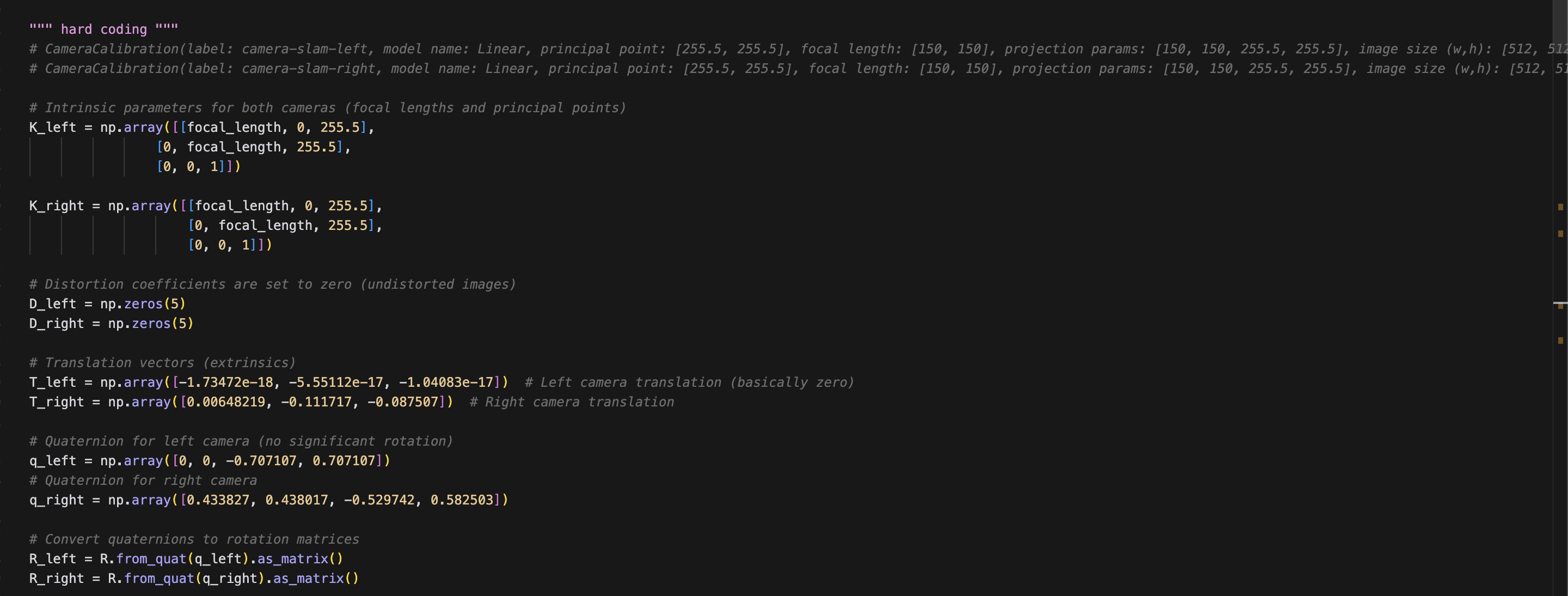

I simply printed pinhole_cw90 in the CLI, saved the values, and hard-coded the camera info like this:

Note that each rotation matrix stores the camera axes expressed in the world frame. In other words, R_left is the left_cam-to-world rotation, R_{world,left_cam}: multiplied from the left, it rotates a 3D point from the left camera frame into the world frame (ignoring translation).
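Because cv2.stereoRectify wants the left-to-right transform rather than two camera-to-world poses, the hard-coded values have to be converted first. A minimal numpy sketch, assuming you also have each camera's position in the world frame (`t_world_left` and `t_world_right` are hypothetical names; the note above only lists rotations). From p_world = R_wl p_left + t_wl = R_wr p_right + t_wr it follows that p_right = R_wr^T R_wl p_left + R_wr^T (t_wl - t_wr).

```python
import numpy as np

def relative_pose_left_to_right(R_world_left, t_world_left, R_world_right, t_world_right):
    """Convert two camera-to-world poses into the stereo (R, T) OpenCV expects.

    Each pose maps camera points to the world: p_world = R_world_cam @ p_cam + t_world_cam.
    Returns R (3x3) and T (3x1) with p_right = R @ p_left + T.
    """
    R = R_world_right.T @ R_world_left
    T = R_world_right.T @ (t_world_left - t_world_right)
    return R, T.reshape(3, 1)

# Toy example (made-up numbers): the right camera sits 10 cm along world +x
# and both cameras share the world orientation.
R_wl, t_wl = np.eye(3), np.zeros(3)
R_wr, t_wr = np.eye(3), np.array([0.1, 0.0, 0.0])
R, T = relative_pose_left_to_right(R_wl, t_wl, R_wr, t_wr)
```

For this toy rig T comes out as (-0.1, 0, 0): a point seen by the left camera appears shifted toward -x in the right camera's frame, which is the usual sign for a horizontal left-right pair.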

Rectify with OpenCV functions

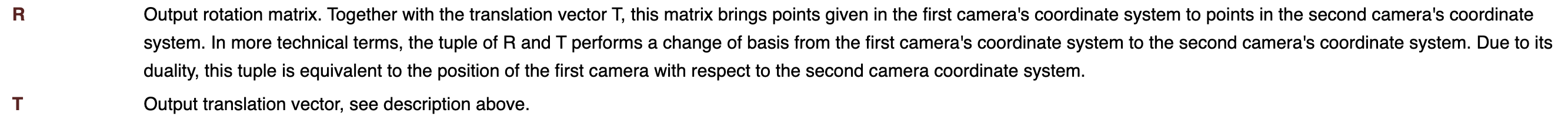

Be careful about what the OpenCV functions assume about their inputs:

https://docs.opencv.org/3.4/d9/d0c/group__calib3d.html#ga617b1685d4059c6040827800e72ad2b6

https://docs.opencv.org/3.4/d9/d0c/group__calib3d.html#ga91018d80e2a93ade37539f01e6f07de5


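In particular, look at what stereoRectify assumes about its input R and T (normally the output of stereoCalibrate): together they bring points from the first camera's coordinate system into the second camera's, p_2 = R p_1 + T. This is not the camera-to-world convention of the rotations above.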

(Optional, not correct) Get overlapping regions

```python
import os

import cv2
import numpy as np

# rectified_left / rectified_right: rectified grayscale images from the step above
# save_dir: output directory

# Valid-pixel masks: black (zero) pixels are treated as invalid
mask_left = (rectified_left != 0).astype(np.uint8)
mask_right = (rectified_right != 0).astype(np.uint8)

# Overlapping region = pixels valid in both images (logical AND)
overlap_mask = mask_left & mask_right

# Save the 0/1 mask as a numpy array
np.save(os.path.join(save_dir, 'valid_mask.npy'), overlap_mask)

# Save a visualization (0 -> black, 1 -> white)
cv2.imwrite(os.path.join(save_dir, 'valid_mask.png'), overlap_mask * 255)
```
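One weakness of a zero-value test like the one above is that genuinely dark scene pixels are also marked invalid. A sketch of an alternative, assuming you kept the float remap tables used for rectification (`map_x`, `map_y` are hypothetical names for the `CV_32FC1` outputs of cv2.initUndistortRectifyMap): a rectified pixel is treated as valid when its sampling location lies inside the source image, independent of its intensity.

```python
import numpy as np

def valid_mask_from_maps(map_x, map_y, src_width, src_height):
    """1 where the rectified pixel samples inside the source image, else 0.

    map_x, map_y: float remap tables (rectified pixel -> source pixel).
    Uses the closed range [0, W-1] x [0, H-1] of source pixel centers.
    """
    inside = (
        (map_x >= 0) & (map_x <= src_width - 1)
        & (map_y >= 0) & (map_y <= src_height - 1)
    )
    return inside.astype(np.uint8)

# Toy example: a map that shifts the image 3 px to the right, so the
# first 3 rectified columns sample outside the source.
h, w = 4, 8
map_x, map_y = np.meshgrid(np.arange(w, dtype=np.float32) - 3.0,
                           np.arange(h, dtype=np.float32))
mask = valid_mask_from_maps(map_x, map_y, w, h)
```

The left and right masks computed this way can then be combined with `&` exactly as in the snippet above.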

2. Estimate disparity using RAFT-Stereo

Install RAFT-Stereo from my fork (modified from Justin's version): `pip install git+https://github.com/hongsukchoi/RAFT-Stereo`

Before running, save the Q matrix from the stereoRectify step above; it is needed to convert disparity to depth.
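For reference, a minimal numpy sketch of how Q turns a disparity map into depth, the same homogeneous mapping cv2.reprojectImageTo3D applies: each pixel (x, y) with disparity d goes to Q [x, y, d, 1]^T, and depth is the third coordinate divided by the fourth. The function name is hypothetical, and I assume d = x_left - x_right > 0; check the sign convention of your RAFT-Stereo output before using it.

```python
import numpy as np

def disparity_to_depth(disparity, Q, min_disparity=1e-6):
    """Convert a left-image disparity map (pixels) to depth using the 4x4 Q.

    Pixels with disparity <= min_disparity get depth 0 (invalid).
    """
    h, w = disparity.shape
    xs, ys = np.meshgrid(np.arange(w, dtype=np.float64), np.arange(h, dtype=np.float64))
    # Homogeneous [x, y, d, 1] for every pixel, shape (h, w, 4)
    pts = np.stack([xs, ys, disparity.astype(np.float64), np.ones_like(xs)], axis=-1)
    hom = pts @ Q.T  # [X, Y, Z, W] before normalization
    valid = disparity > min_disparity
    depth = np.zeros((h, w), dtype=np.float64)
    depth[valid] = hom[..., 2][valid] / hom[..., 3][valid]
    return depth

# Toy Q for focal length f and baseline B (made-up numbers)
f, B, cx, cy = 100.0, 0.1, 2.0, 1.5
Q = np.array([[1.0, 0.0, 0.0, -cx],
              [0.0, 1.0, 0.0, -cy],
              [0.0, 0.0, 0.0, f],
              [0.0, 0.0, 1.0 / B, 0.0]])
disp = np.full((3, 4), 10.0)
disp[0, 0] = 0.0  # invalid pixel
depth = disparity_to_depth(disp, Q)  # f * B / d = 1.0 wherever d = 10
```

For a rectified horizontal pair this reduces to the familiar depth = f * B / d, so saving Q (e.g. with `np.save`) is enough to recover metric depth later.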

Run: https://github.com/hongsukchoi/generic_tools/blob/master/raft_stereo.py

Vis: https://github.com/hongsukchoi/generic_tools/blob/master/vis_depth.py

It's tricky to normalize a video for good visualization.
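One approach that tends to help (my assumption, not necessarily what vis_depth.py does) is to use a single value range for the whole clip, e.g. robust percentiles over all frames, instead of normalizing each frame by its own min/max, which makes the same depth change color from frame to frame. A minimal numpy sketch with a hypothetical function name:

```python
import numpy as np

def normalize_depth_video(frames, low_pct=2.0, high_pct=98.0):
    """Map a (T, H, W) stack of depth/disparity maps to uint8 with ONE shared range.

    Non-positive values are treated as invalid and stay black. The range comes
    from percentiles over all valid pixels of all frames, so the same value
    gets the same gray level in every frame.
    """
    frames = np.asarray(frames, dtype=np.float64)
    valid = frames > 0
    lo, hi = np.percentile(frames[valid], [low_pct, high_pct])
    scaled = (frames - lo) / max(hi - lo, 1e-12)
    out = (np.clip(scaled, 0.0, 1.0) * 255).astype(np.uint8)
    out[~valid] = 0
    return out
```

Each uint8 frame can then be colorized (e.g. with `cv2.applyColorMap`) and written to a video; the shared range is what keeps the colors stable over time.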

Now working on the RGB / left SLAM camera pair, but somehow it's not working well :( (I started this around Oct. 3, 2024 and stopped partway.)
