Jitendra lunch group meeting; source: (Israel / The Technion)

Probability Theory 101

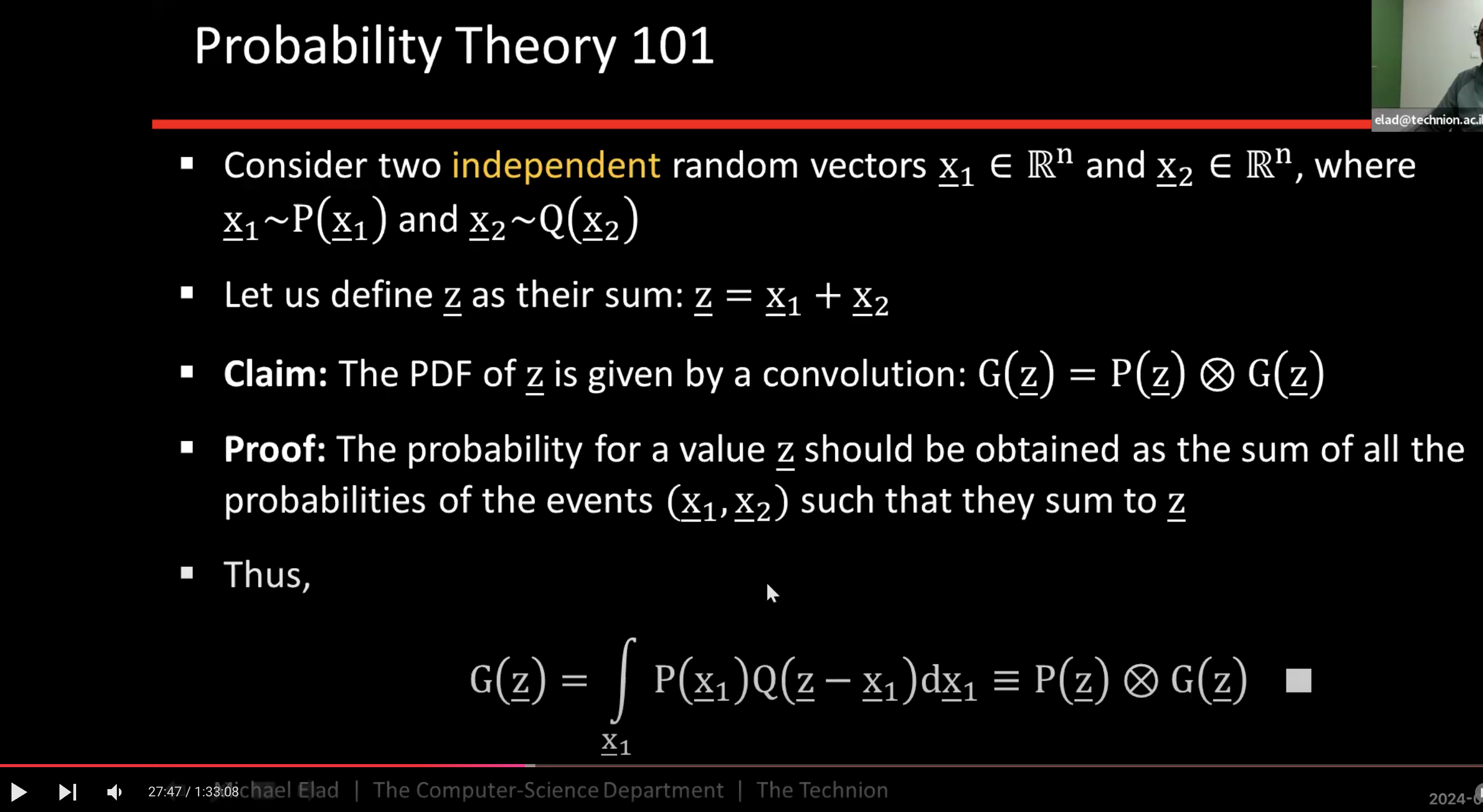

What is the "convolution property of a PDF (probability density function)"?

For two independent random variables, the PDF of their sum is the convolution of their individual PDFs.
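As a small illustration of the property, here is a sketch using the discrete analogue (probability mass functions instead of densities). The two-dice setup and the helper name `convolve` are assumptions chosen for the example, not something from the meeting.

```python
from fractions import Fraction

def convolve(p, q):
    """Discrete convolution of two PMFs given as lists indexed from 0."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out

# PMF of one fair die over the values 1..6 (index 0 holds the value 1).
die = [Fraction(1, 6)] * 6

# Index k of the result holds P(sum = k + 2), because both dice start at 1.
two_dice = convolve(die, die)
p_sum_7 = two_dice[7 - 2]
print(p_sum_7)  # 1/6
```

The same sum-over-all-splits structure becomes an integral for continuous densities.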

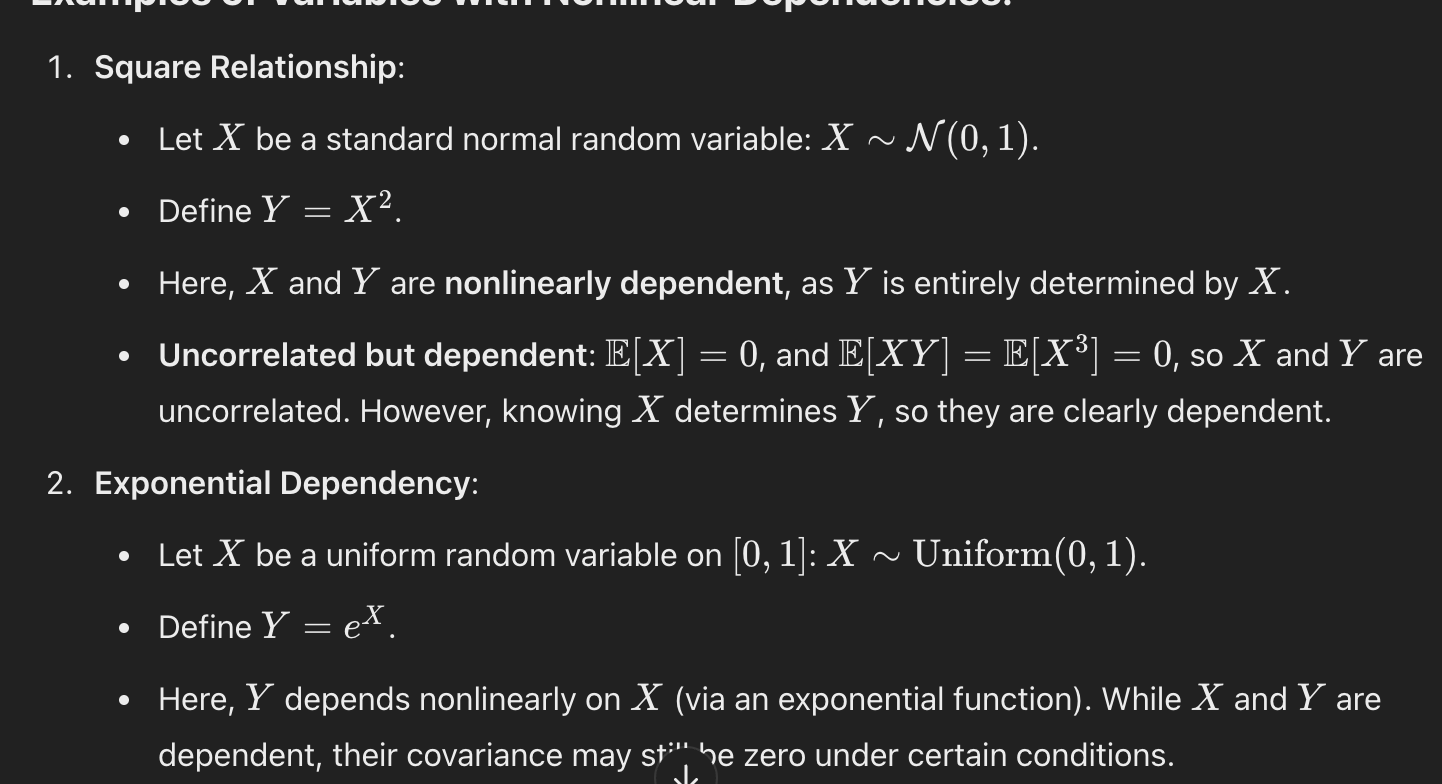

What’s the difference between "uncorrelated" and "independent" in terms of expectation?

- x1 and x2 are uncorrelated if and only if the expectation of their product equals the product of their expectations: E[x1 x2] = E[x1] E[x2].

- If x1 and x2 are independent, then E[x1 x2] = E[x1] E[x2] necessarily holds. However, the converse is not true; being uncorrelated does not imply independence.

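- What is the exception? Jointly Gaussian random variables: if they are uncorrelated, they are also independent. Note that the joint condition matters. Two variables that are each Gaussian on their own, but not jointly Gaussian, can still be uncorrelated yet dependent.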
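A concrete (non-Gaussian) case of "uncorrelated but dependent" can be checked by hand. The sketch below assumes X is uniform on {-1, 0, 1} and Y = X², a standard textbook construction rather than an example from the meeting.

```python
from fractions import Fraction

# X is uniform on {-1, 0, 1}; Y = X**2 is a deterministic function of X.
xs = [-1, 0, 1]
p = Fraction(1, 3)

e_x = sum(p * x for x in xs)             # E[X]
e_y = sum(p * x**2 for x in xs)          # E[Y]
e_xy = sum(p * x * x**2 for x in xs)     # E[XY] = E[X^3]

uncorrelated = (e_xy == e_x * e_y)

# Independence would require P(X=0, Y=0) == P(X=0) * P(Y=0).
p_joint = p          # X = 0 forces Y = 0, so the joint probability is 1/3
p_product = p * p    # P(X=0) * P(Y=0) = 1/3 * 1/3
independent = (p_joint == p_product)

print(uncorrelated, independent)  # True False
```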

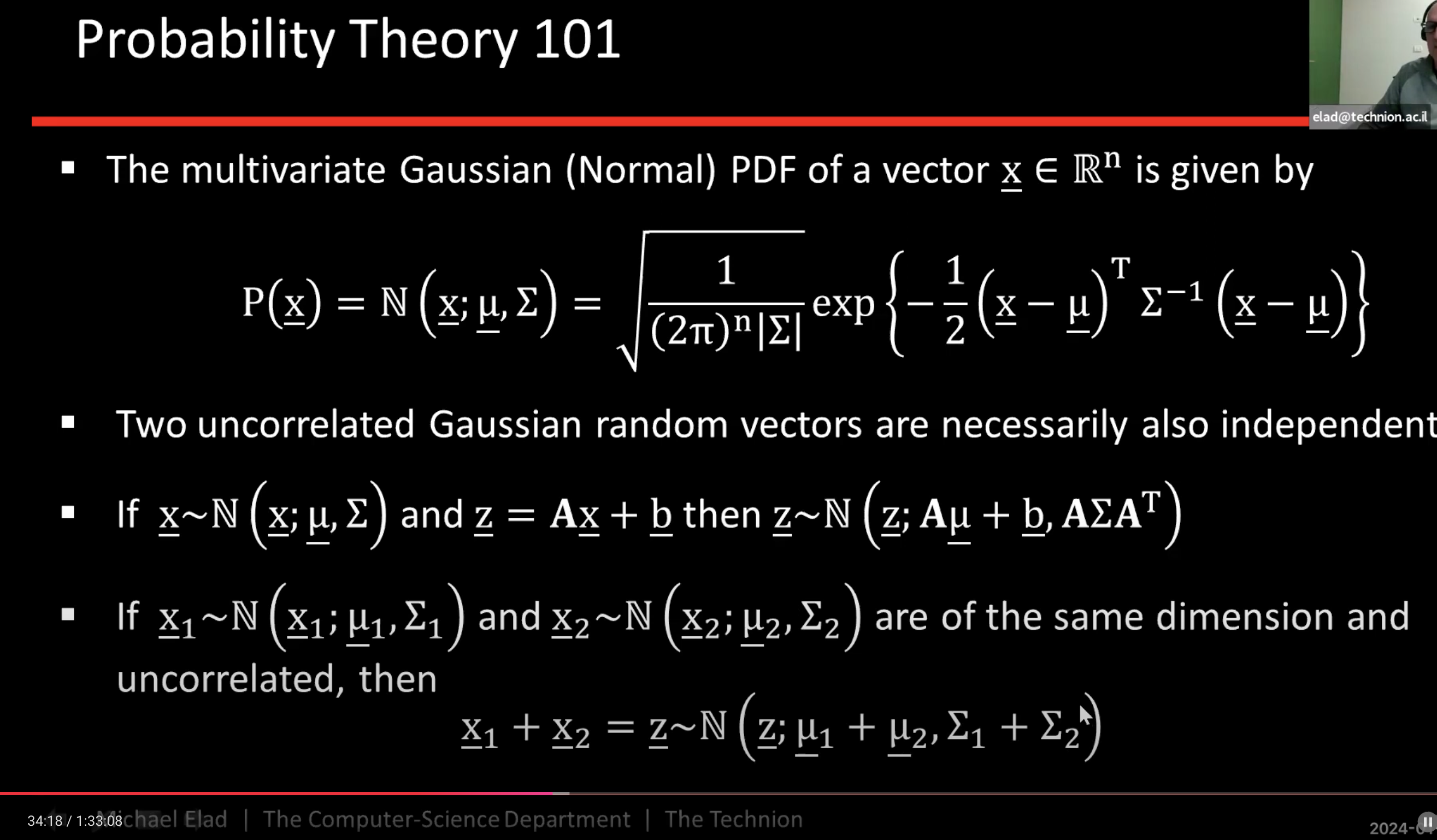

Is the convolution of a Gaussian with a Gaussian also a Gaussian?

Yes. The means add and the variances add.
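A quick numerical sanity check, as a sketch: convolve two Gaussian densities with a midpoint Riemann sum and compare against a single Gaussian whose mean and variance are the sums. The parameter values, integration range, and function names are arbitrary choices for illustration.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def convolved_pdf(z, m1, v1, m2, v2, lo=-20.0, hi=20.0, n=4000):
    """Midpoint-rule approximation of the integral of p1(t) * p2(z - t) dt."""
    dt = (hi - lo) / n
    total = 0.0
    for k in range(n):
        t = lo + (k + 0.5) * dt
        total += gaussian_pdf(t, m1, v1) * gaussian_pdf(z - t, m2, v2)
    return total * dt

m1, v1 = 1.0, 0.5
m2, v2 = -2.0, 1.5

# Largest gap between the numerical convolution and N(m1 + m2, v1 + v2).
max_err = max(
    abs(convolved_pdf(z, m1, v1, m2, v2) - gaussian_pdf(z, m1 + m2, v1 + v2))
    for z in [-4.0, -2.0, -1.0, 0.0, 1.0, 2.0]
)
print(max_err < 1e-6)
```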

Information Theory 101

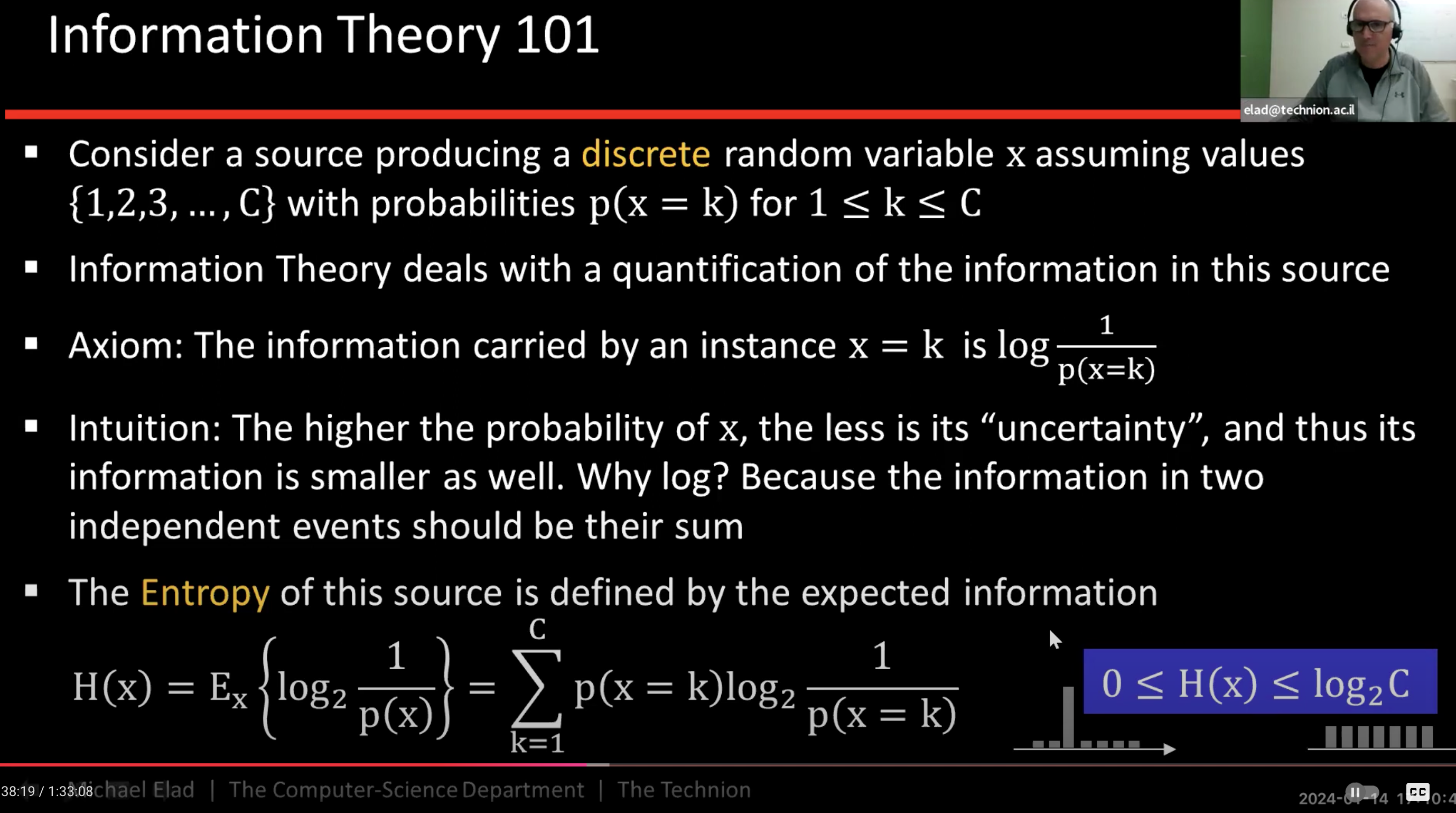

Why is information defined using logarithms? What is the difference between entropy and information?

Information is defined using logarithms to ensure that the total information from two independent events can be expressed as a sum. The difference between entropy and information is that entropy is the expected value (or average) of information.
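Both points can be seen in a few lines. This is a minimal sketch in bits (log base 2); the function names and the example probabilities are assumptions for illustration.

```python
import math

def information(p):
    """Self-information of an event with probability p, in bits."""
    return -math.log2(p)

def entropy(dist):
    """Entropy in bits: the expected self-information under dist."""
    return sum(p * information(p) for p in dist if p > 0)

# Two independent events: probabilities multiply, information adds.
p_a, p_b = 0.5, 0.25
joint_info = information(p_a * p_b)              # information of "a and b"
sum_info = information(p_a) + information(p_b)   # 1 bit + 2 bits

fair_coin = entropy([0.5, 0.5])     # 1 bit per toss
biased_coin = entropy([0.9, 0.1])   # less than 1 bit: the outcome is more predictable

print(joint_info, sum_info, fair_coin)
```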

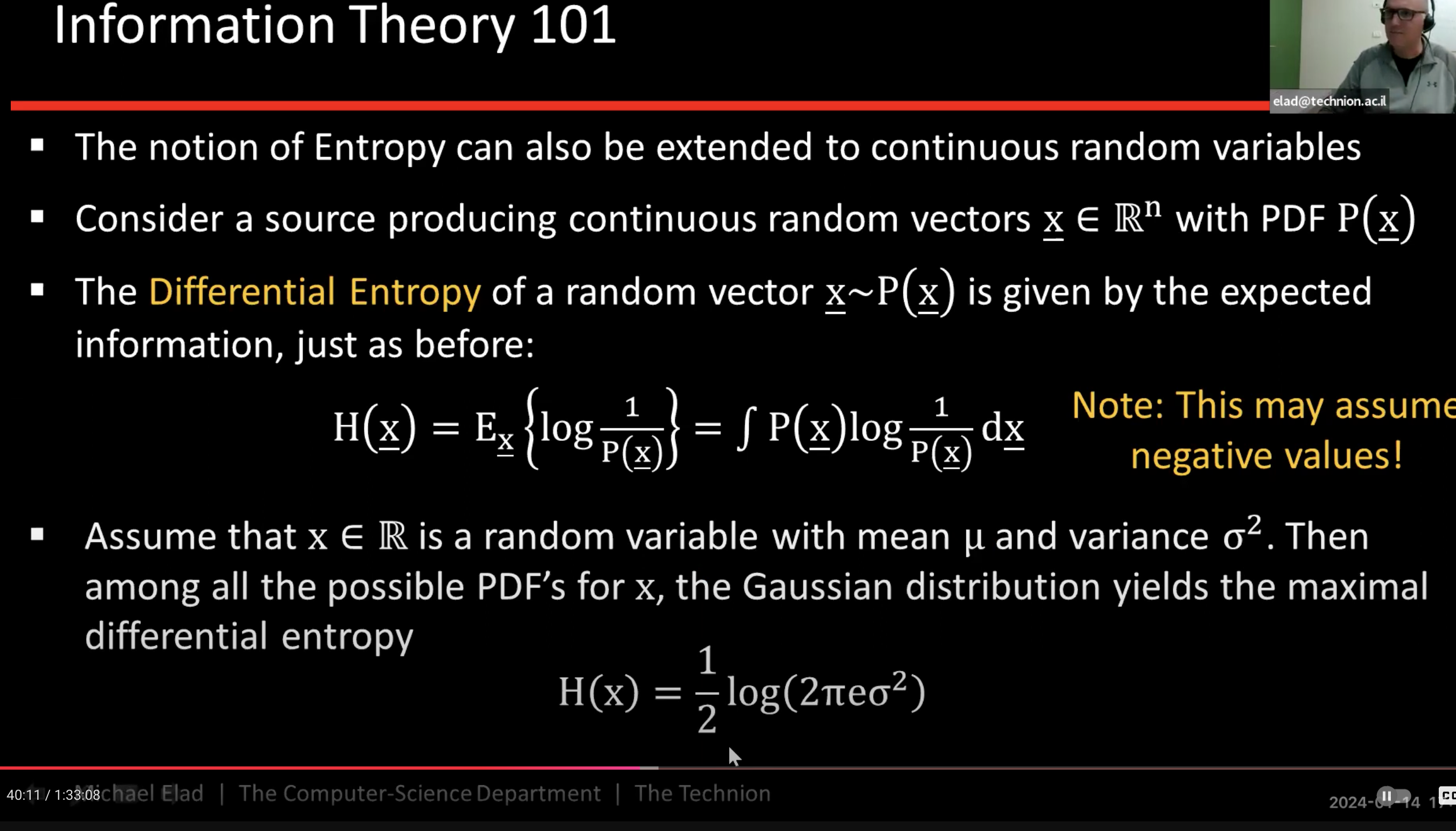

What is differential entropy, and can it be negative?

Differential entropy is the continuous counterpart of the discrete Shannon entropy and is used to quantify the uncertainty or randomness of a continuous probability distribution.

Yes, it can be negative. Unlike a probability mass, a probability density function (PDF) can take values greater than 1, so log p(x) can be positive and the differential entropy, -E[log p(x)], can fall below zero. This is a common source of confusion, because discrete Shannon entropy is never negative.
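The simplest case to check is a uniform density, sketched here in nats. A uniform distribution on an interval of width w has density 1/w and differential entropy ln(w); the helper name is an assumption for illustration.

```python
import math

def uniform_differential_entropy(width):
    """Differential entropy (nats) of a uniform density on an interval of this width.

    The density is 1/width everywhere on the interval, so
    -integral (1/width) * ln(1/width) dx = ln(width).
    """
    return math.log(width)

density_narrow = 1 / 0.5                        # the PDF value is 2.0, above 1
h_narrow = uniform_differential_entropy(0.5)    # ln(0.5), negative
h_unit = uniform_differential_entropy(1.0)      # exactly 0
h_wide = uniform_differential_entropy(2.0)      # ln(2), positive

print(density_narrow, h_narrow, h_unit, h_wide)
```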

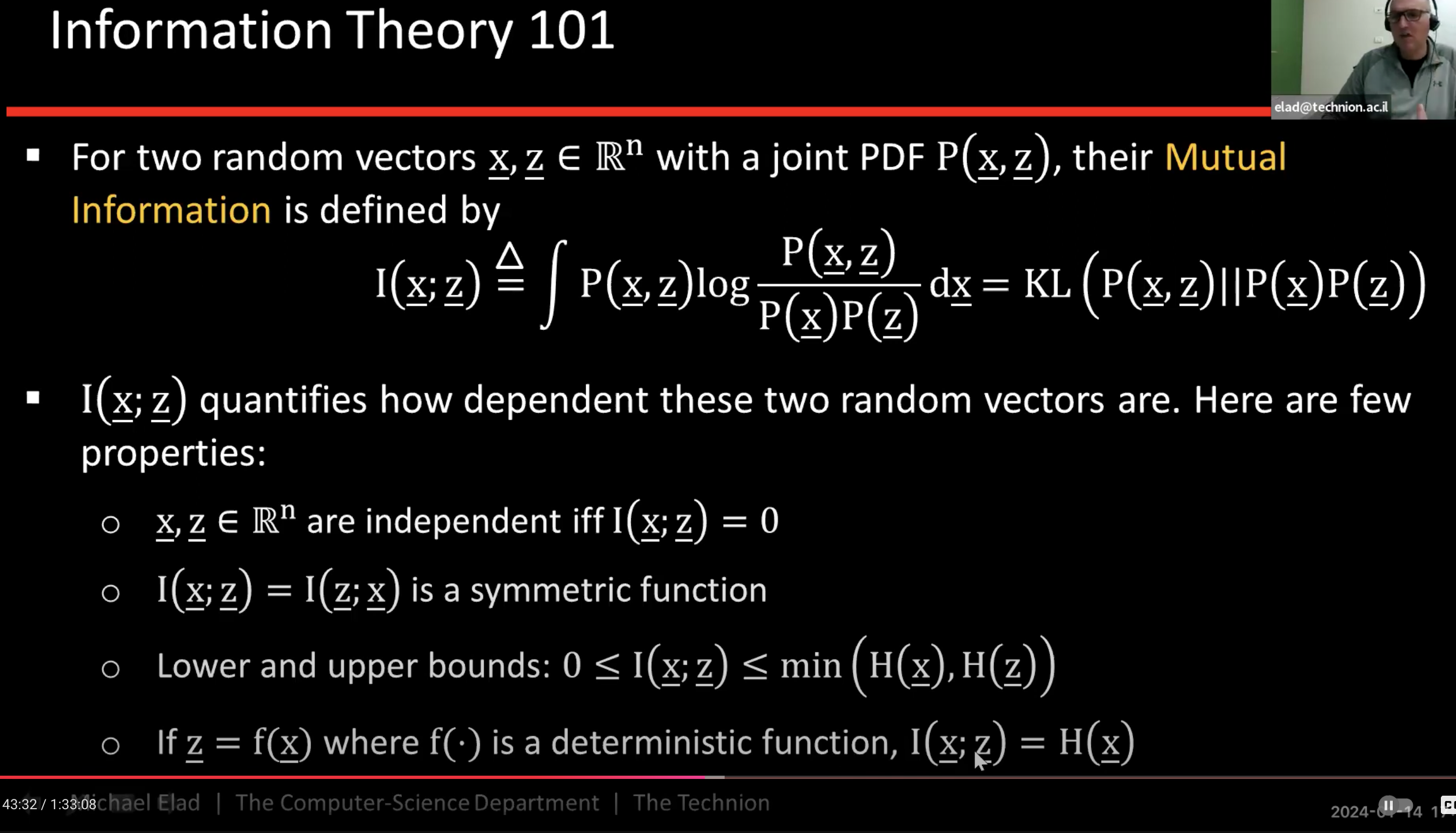

What is mutual information?

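It measures the distance (the KL divergence) between the joint distribution p(x, y) and the product of the marginals p(x)p(y), that is, how far the two variables are from being independent. It is zero exactly when they are independent.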
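For discrete variables this can be computed directly from a joint table. The sketch below uses bits and two toy 2x2 joint distributions; the function name and the tables are assumptions for illustration.

```python
import math

def mutual_information(joint):
    """I(X;Y) in bits for a joint PMF given as a 2D list joint[x][y]."""
    px = [sum(row) for row in joint]            # marginal of X
    py = [sum(col) for col in zip(*joint)]      # marginal of Y
    return sum(
        pxy * math.log2(pxy / (px[i] * py[j]))
        for i, row in enumerate(joint)
        for j, pxy in enumerate(row)
        if pxy > 0
    )

# Two independent fair bits: the joint equals the product of marginals.
independent_bits = [[0.25, 0.25], [0.25, 0.25]]
# Y is an exact copy of X: knowing one bit tells you the other.
copied_bit = [[0.5, 0.0], [0.0, 0.5]]

mi_independent = mutual_information(independent_bits)
mi_copied = mutual_information(copied_bit)
print(mi_independent, mi_copied)  # 0.0 1.0
```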

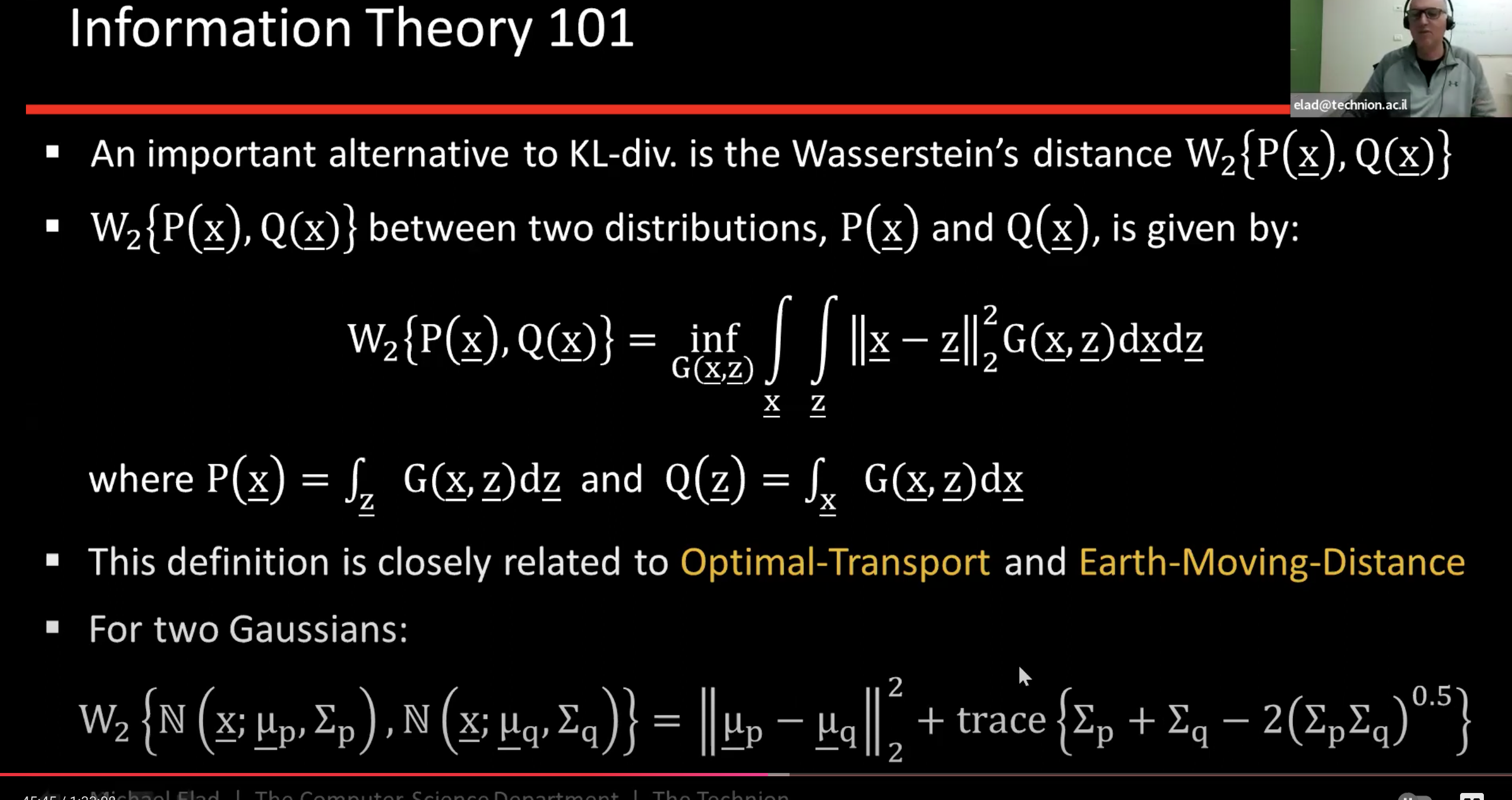

What is the Wasserstein distance?

Background of diffusion models

Mostly about why knowing P(x) is important.

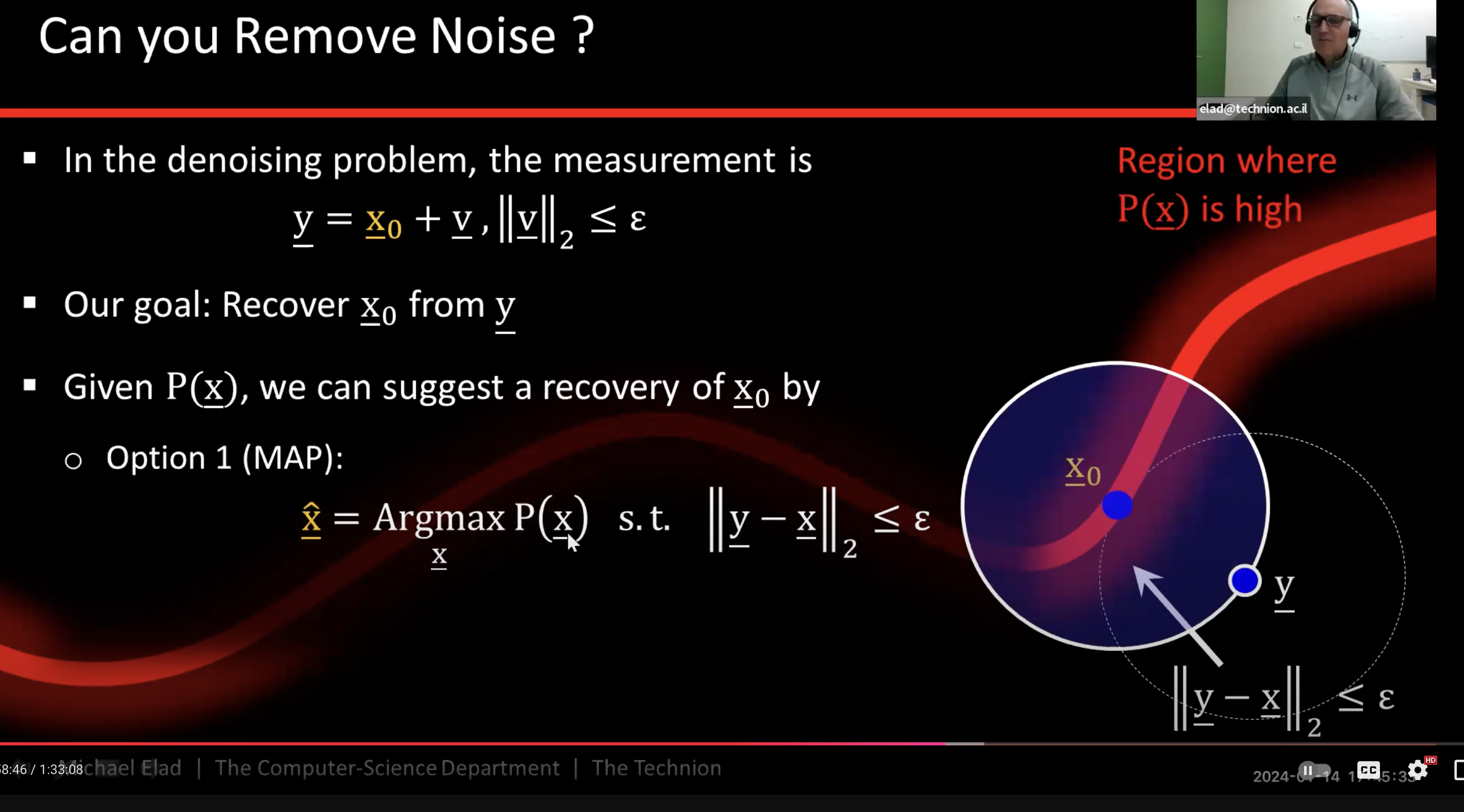

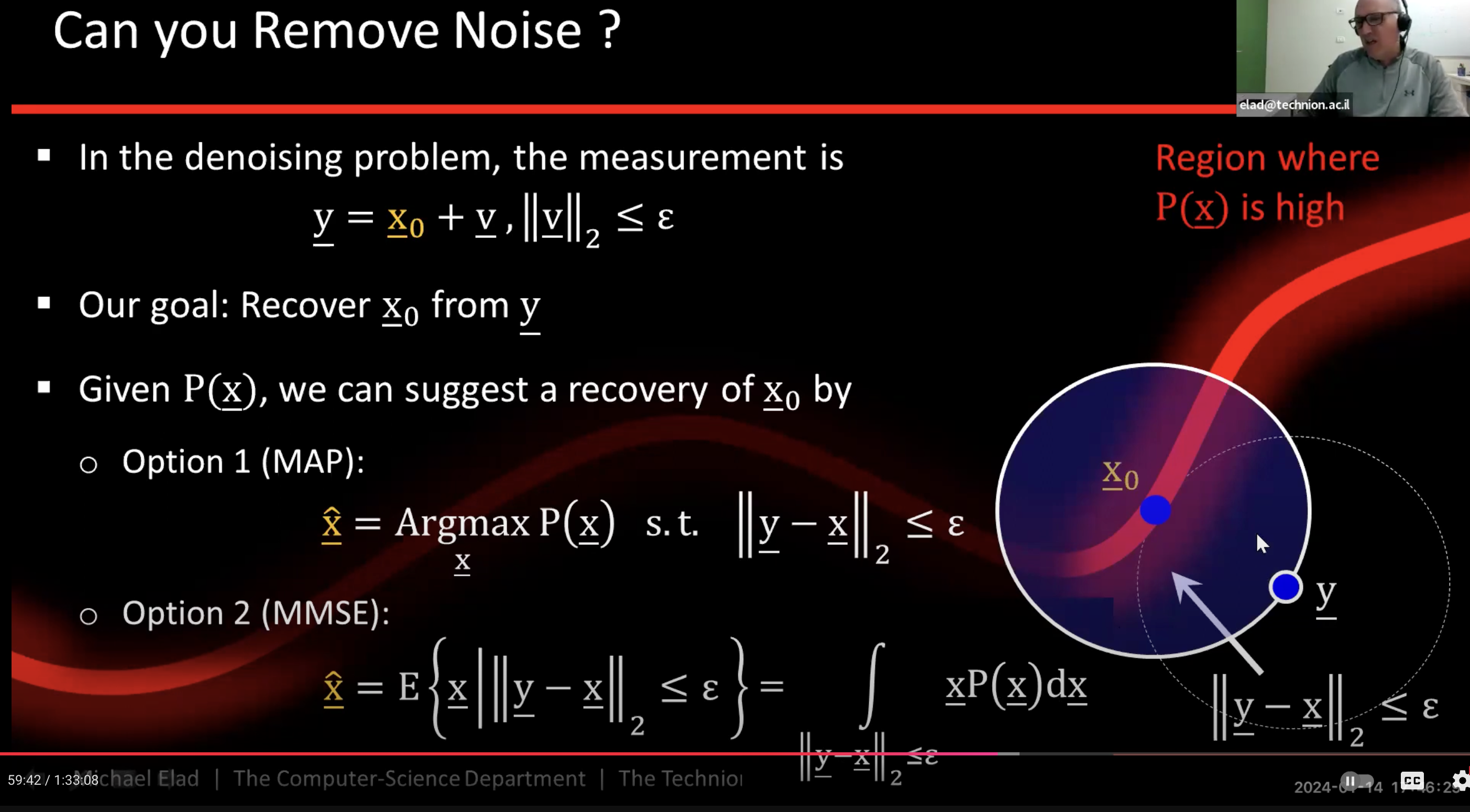

- Why does y lie outside of the manifold?

Because the manifold has a much lower dimension than the data space.

- What is the manifold?

The region where P(x) is high.

- How do you remove noise from a signal?

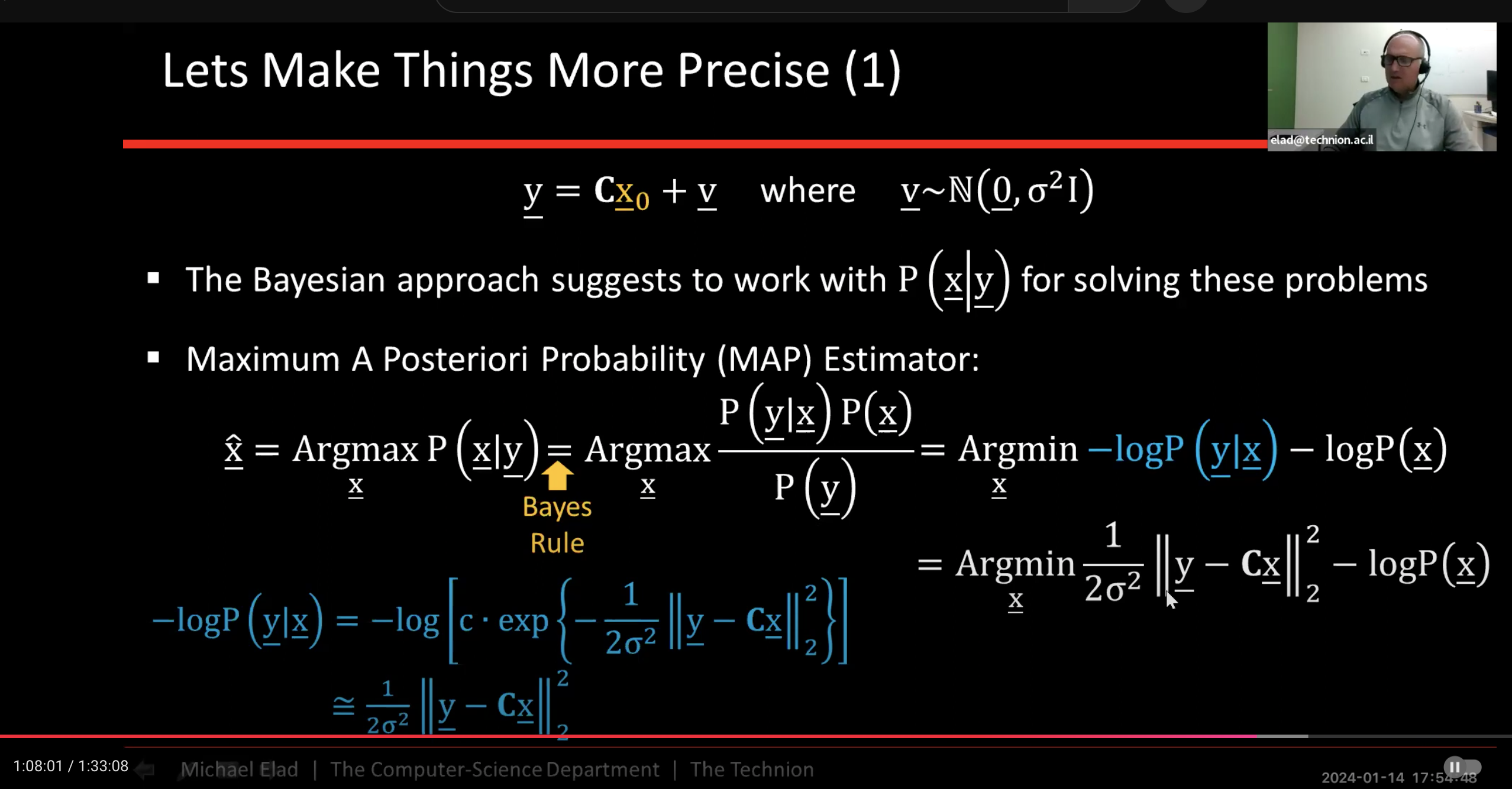

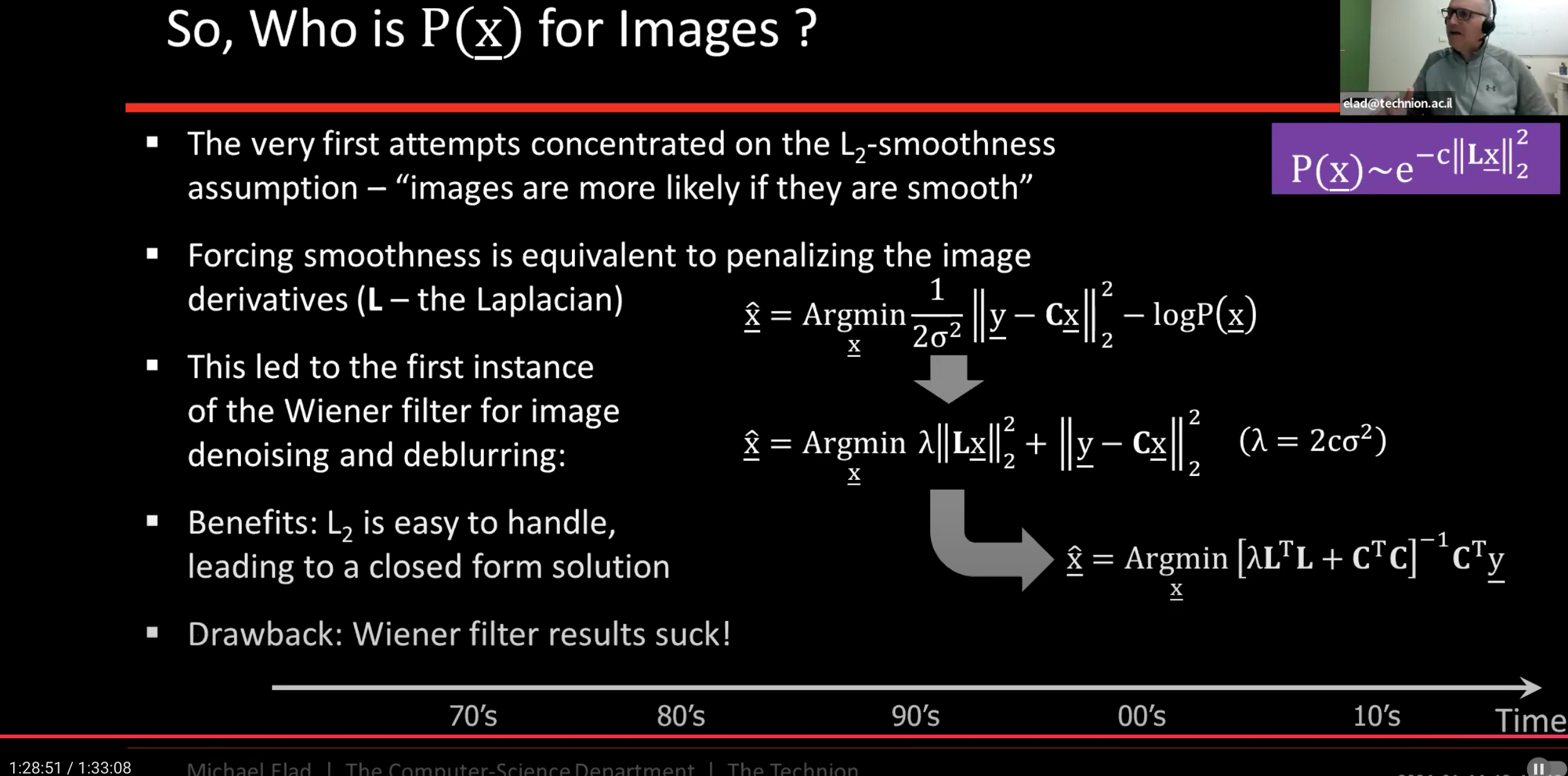

1. MAP: Maximum a posteriori

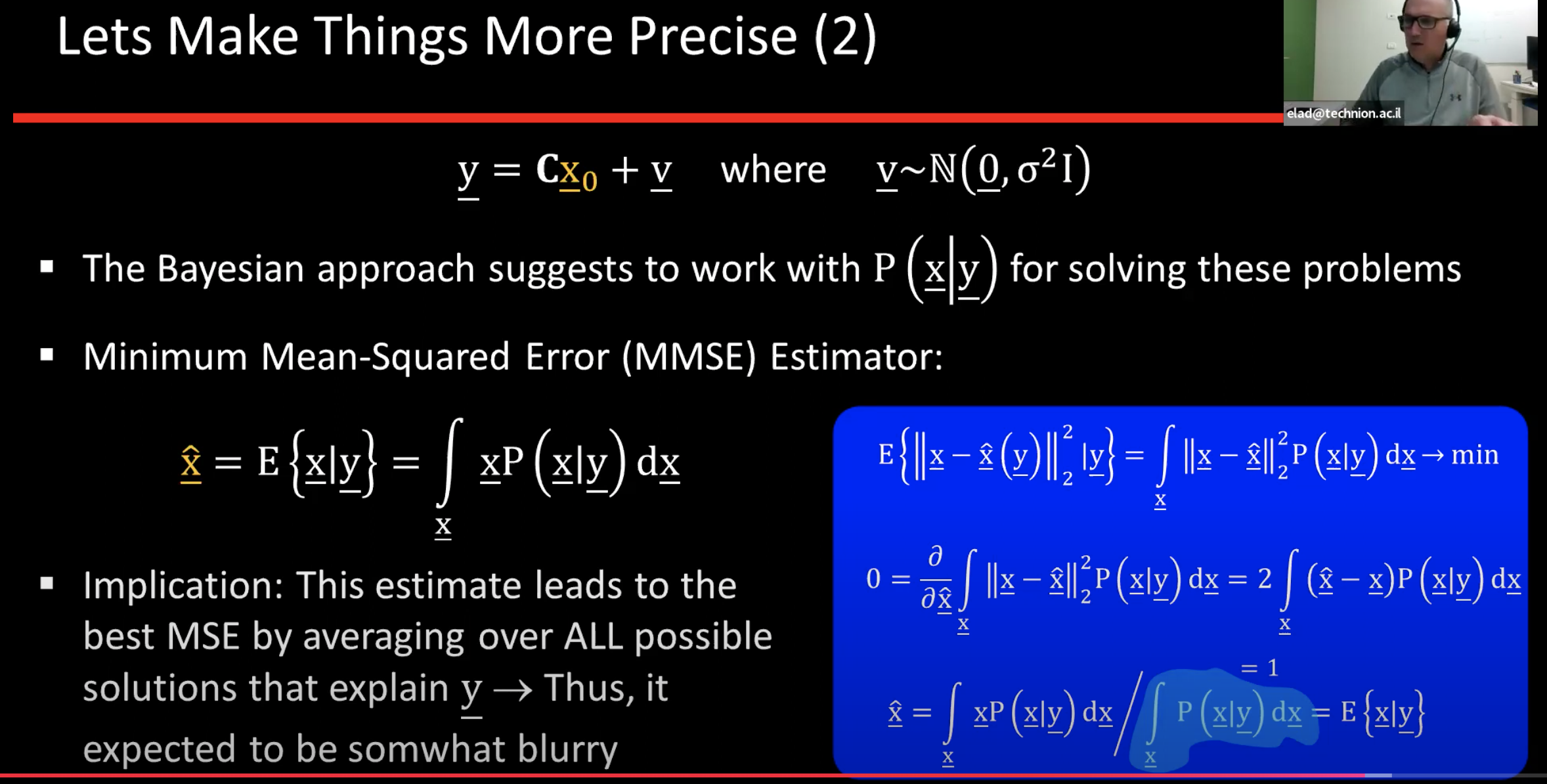

2. MMSE: Minimum mean square error
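To make the difference between the two estimators concrete, here is a toy scalar sketch. It assumes a prior in which x is -1 or +1 with equal probability, and an observation y = x + Gaussian noise with variance `sigma2`; this setup and the function names are illustrative assumptions, not the model from the slides.

```python
import math

def posterior_plus(y, sigma2):
    """P(x = +1 | y) for x in {-1, +1} with equal priors and y = x + N(0, sigma2)."""
    return 1.0 / (1.0 + math.exp(-2.0 * y / sigma2))

def map_estimate(y, sigma2):
    """MAP: the single most probable x given y."""
    return 1.0 if posterior_plus(y, sigma2) >= 0.5 else -1.0

def mmse_estimate(y, sigma2):
    """MMSE: the posterior mean E[x | y], which equals tanh(y / sigma2) here."""
    p = posterior_plus(y, sigma2)
    return p * 1.0 + (1.0 - p) * (-1.0)

y, sigma2 = 0.2, 1.0
x_map = map_estimate(y, sigma2)     # snaps to a value the prior allows
x_mmse = mmse_estimate(y, sigma2)   # an average that lies between -1 and +1
print(x_map, x_mmse)
```

In this toy case MAP always returns a point that the prior supports, while MMSE averages over the posterior and can return a value the prior assigns zero probability.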

- Is noise correlated with the image? No. Why?

Noise is generally uncorrelated with an image because it is typically modeled as a random, independent process that does not depend on the image content.

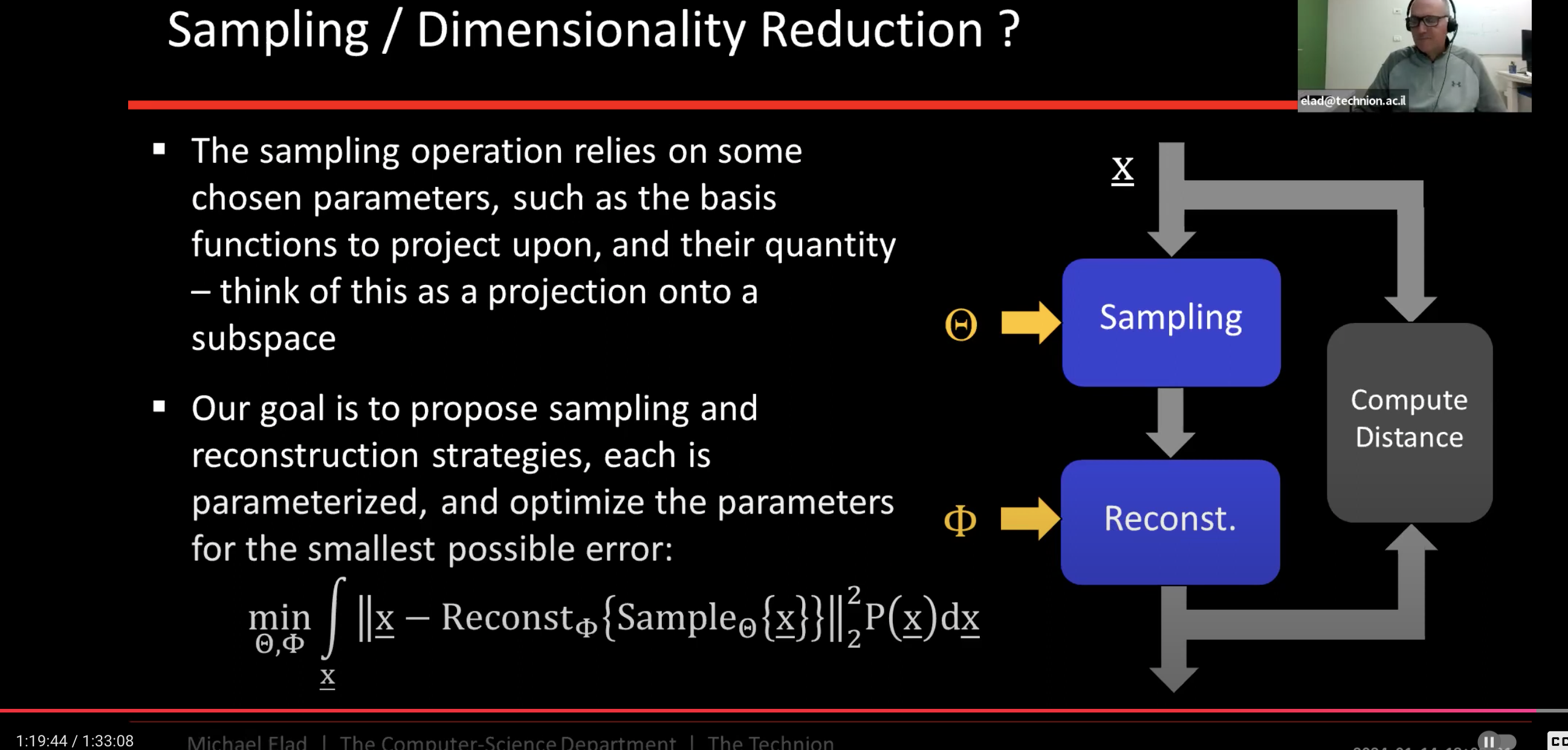

How do we solve large families of inverse problems, including linear inverse problems?

In the same way. If you know P(x), you can solve large families of inverse problems with MAP or MMSE estimation.

Does knowing P help compression?

Yes.
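One way to see why, as a sketch: an ideal code spends about -log2 q(s) bits on symbol s when it is built for a model q. If q matches the true distribution, the average cost is the entropy; any other model costs more. The four-symbol distribution and the function name are assumptions for illustration.

```python
import math

def expected_code_length(true_p, model_q):
    """Average bits per symbol when symbols follow true_p
    but code lengths are -log2(model_q)."""
    return sum(p * -math.log2(q) for p, q in zip(true_p, model_q) if p > 0)

true_p = [0.5, 0.25, 0.125, 0.125]
uniform = [0.25, 0.25, 0.25, 0.25]   # a coder that knows nothing about P

bits_knowing_p = expected_code_length(true_p, true_p)    # equals the entropy
bits_without_p = expected_code_length(true_p, uniform)   # fixed 2 bits per symbol

print(bits_knowing_p, bits_without_p)  # 1.75 2.0
```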

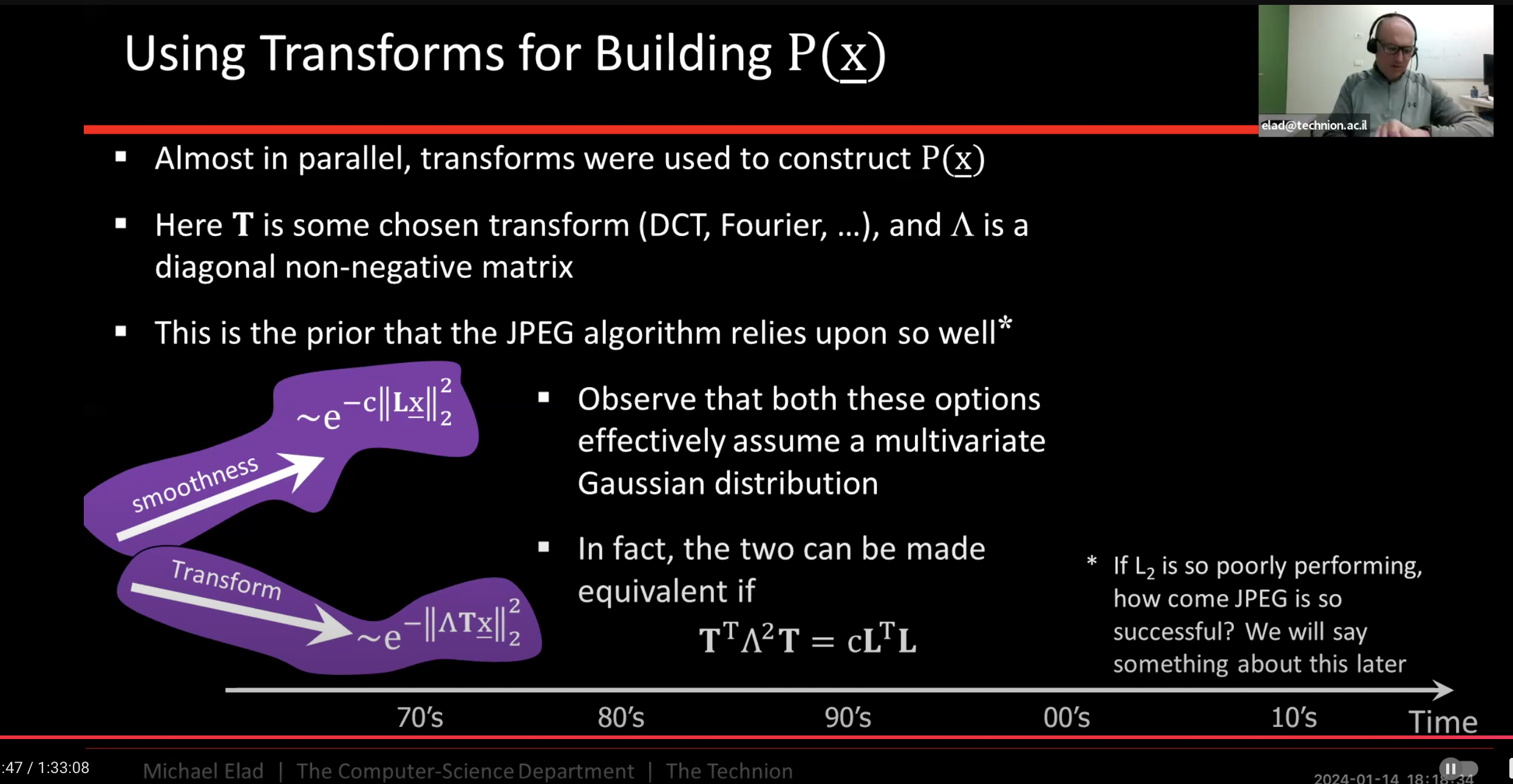

Why do we still use JPEG?

Because it doesn't smooth everything the way a Wiener filter does.

Instead, JPEG focuses on low frequencies but still keeps high frequencies where they exist, through the DCT coefficients. It also works on patches (blocks) of an image rather than on the whole image at once.

What is DCT?

The Discrete Cosine Transform (DCT) is a mathematical transformation used in JPEG image compression to transform spatial domain image data into the frequency domain. This transformation is a key step in the JPEG compression process, enabling the efficient reduction of image file sizes while maintaining acceptable visual quality.
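A minimal 1D sketch of the transform, assuming the unnormalized DCT-II form. JPEG itself applies a scaled 2D version to 8x8 blocks, so this only illustrates the idea; the function name and test signals are assumptions.

```python
import math

def dct2(signal):
    """Unnormalized DCT-II: X[k] = sum_n x[n] * cos(pi / N * (n + 0.5) * k)."""
    n_samples = len(signal)
    return [
        sum(x * math.cos(math.pi / n_samples * (n + 0.5) * k)
            for n, x in enumerate(signal))
        for k in range(n_samples)
    ]

# A flat patch: all of the signal ends up in the k = 0 (DC) coefficient.
flat = dct2([10.0] * 8)

# A smooth ramp: the low-frequency coefficients dominate the high ones.
ramp = dct2([float(v) for v in range(8)])

print([round(c, 3) for c in flat])
print([round(c, 3) for c in ramp])
```

Smooth image regions therefore need only a few low-frequency coefficients, which is what makes coarse quantization of the remaining coefficients cheap.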
